Comprehensive Gold Modelling for the OMDB Dataset

Business and Graph perspectives

Background

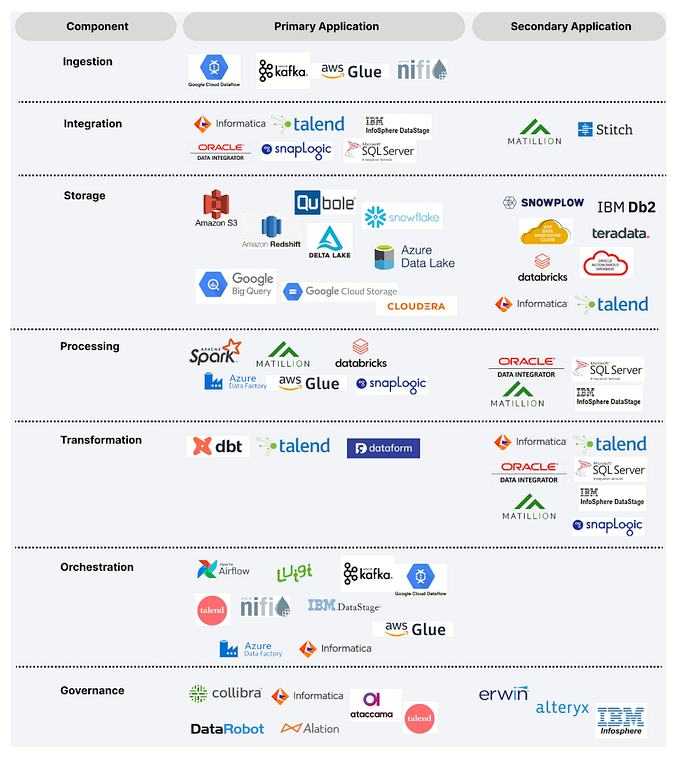

In the previous article Ingesting the OMDB Dataset we analyzed the first layers of the OMDB dataset. The Bronze and the Silver. We described the pathologies of the dataset, the solutions used for data testing it and its general structure. Let’s replicate our Medallion architecture here as our guide to the content of this article

In the present article we are interested in the last layer. We will go over the details for the OMDB dataset. This layer is where analytics are generated and therefore it is very important for business. We will present two treatments of each layer. A table one and a graph one. Both have important subtleties and complement each other. We host all the medallion code in one repository. The graph representations live in another one. We will not use other approaches since it is a full refresh dataset and not an event-based one.

Classic normalized Gold

In this layer tables start to lose their character. But not much. What happens here is

- Scalar seeds, values and foreign keys are absorbed to arrays

- Old fields are replaced with corrected ones

- Tables are renamed to reflect business

- Tables in 1–1 correspondence are merged

- Primary keys get a uniform name across tables

- New tables are created to uniformize concepts

Whatever happens though, we still need data tests. Always test! Lets go these points one by one through examples. For the first case an example is the gold_normalized_cast_model.yml. Here the primary keys of the original one, silver_cleansed_all_casts_model.yml are actually all the keys

- movie_id

- person_id

- job_id

- role

- position

Upon close inspection the last one is an index and it makes no business sense. The first three live in other tables. Role is just a value and nothing else. For this reason we can drop the position and absorb the roles, given a value of the first three keys, to an array. Now the table conveys real business value. For a movie, a job position and a person there is an array of roles.

We can do something similar for languages and regions (see code, or the example in the sixth point). The gain here is that primary keys are more explicit. For the second and third points gold_normalized_content_series.sql is an example.

For the fourth point the image tables (silver_cleansed_image_ids_model.yml and silver_cleansed_image_licenses_model.yml) are in 1–1 correspondence. So we merge them. The same applies to abstracts. The fifth point comes naturally after the cleansing. Series get a series_id instead of an id, parents are renamed to the id of the parent content and so on. The sixth point is the most critical to business. While originally various content types have some common fields, other tables refer to the primary ids of these tables collectively. That is why we used the with_cleansing_all_ids_of_original_movies_and_series, with_cleansing_all_ids_of_series_and_seasons, with_cleansing_all_ids_of_singleton_series tables in the cleansing layer. These tables are artificial and point towards insufficient modelling. The approach we will use is to absorb common fields into a new artificial table (with very real business meaning) called gold_normalized_content.sql.

This table also has the Point 1 aggregations to arrays. Now what remains of the original content tables? Let’s see the movie table.

We observe that the individual content tables, act as facts while the content plays the role of a dimension. But not just a simple dimension. If we did not make the aggregations of foreign keys, we would have a true snowflake schema. Here we still have a snowflake schema, but we have vectorized the foreign keys for economy. In reality, we do not have necessarily a relational store (so Postgres could be swapped for something else) and we can vectorize without regrets.

With the work we have put, we dropped from 61 tables to 38. Still we need to create data tests. Take your time to run and study what is involved in the code base. And now the question to the careful reader. Why did we mapped the gender at the Gold Layer?

Graph normalized Gold in Neo4J

The data-engineering perspective

Here things get really interesting. We create our first graph representation of our normalized Gold Layer. DBT does not yet support Graph Databases, but we can create a model, export and load to a suitable database. Neo4J is a very popular choice with lots of utilities for Graph Data Science. What we need here is to create Node and Edge tables so as to load to Neo4J. Edges are just pairs of primary-foreign keys (some times we need to un-nest) and nodes are !partially! the tables of the gold normalized layer. For this we will use the APOC library of Neo4J to export through a notebook our data to JSON gzipped files. This is necessary since array fields are mangled by Pandas. JSON is unambiguous.

Before we mentioned !partially! mapping of tables to nodes. Lets spend some time on this crucial detail. One of the bigger misconceptions is that Graph Databases can be easily replaced by a relational database. In addition, some relational databases offer recursive queries and so, despite the natural form or recursion in Graph databases, it is possible to achieve similar functionality. We will give an example to clarify why a Graph Database is precious for Data Modelling (in addition to the naturality of recursive queries). Suppose we have these two tables. The order table

and the batch table

Without loss of generality, we assume unique items in each batch of multiplicity 1. What happens here? In the first table the order is primary key. But in the second table both the batch id and item id are the primary key. In a relational database we could just add a referential integrity constraint through batch_id. But in a graph database the batch id leaves the second table and we have the following situation. The rows of the first table become nodes. The items become nodes in their own right. And the referential integrity transforms to edges. Graph wise we have the following transformation

The left table is what most people think mistakenly in this case for Graph Databases. While the above approach works when the second table has a non-composite primary key, it fails in the presence of one. We lose the correlation between the keys. Consequently, the case of a composite key cannot be tackled this way. Instead we generate a unique key for each entry of the original batch table and remove the keys composing to a primary one. We have two options, either keep it in the node and have the other table linking to it, tag with this key the edges if we decide to proceed as above. Take your time to understand the two cases. We selected the first option (see gold_normalized_cast_model.yml) because we do not have to search in the edges. Another equally significant difference, is that we need to keep the fact tables for the various content types when we talk about a table based Gold Layer. In the Graph setting, this is not necessary if we use Neo4J because a node can have many labels. So we can cheat, and label the content nodes with the content type too and the fact table edges can be transferred to the content table. No need for specific content type nodes any more. A big win, not possible in the table setting. Returning back to the multiplicity assumption, we can also label the edges with the multiplicity and this is why we did not lose ingenerality.

Quick run through the code

See the repo for more details. The export process is just a simple pandas read/export

We compress the output but also we need the orient=’records’ to export row-by-row in json. For putting the extra labels we just create a cypher query

How about nodes and edges. We will use a simple example, namely the person.

Here we call APOC to load a json array and cast birthday/deathday correctly. Above we made them strings because json does not have a native date type. We then index it

and we can link it to a cast

Everything happens correctly and performantly through the magic of Neo4J indexes. Visualization for categoriesis very helpful in Neo4J.

Business Gold

While the previous normalized gold layer is good for analytics, information is still scattered, albeit uniformly, across tables. This is where the business Gold Layer can help. Here the domain is modelled in a 1–1 correspondence. While previously information could be seen as relevant clusters of tables, now the tables are out of the picture and a replaced by objects. This is a de-normalized representation. The denormalization can happen at various levels but we are not interested in deeply nested structures since it will make our queries cumbersome. The trick here is to collect relevant information around a table as arrays of structs. Still we need data tests (provided in the codebase). Let’s see an example.

Here we merge the names of the categories to the main category table in order to create the category business object. For this to work we need an init script that creates the various types and runs with docker if the pgdata folder is empty (in other words, at the very first start, when you nuke the database).

Having done the merges here are the business objects that map the domain

20 tables are compressed semantically to 7. We take here advantage that the content always has a parent and put appropriate tests.

So now, we can have fast analytical queries on business objects. Still there are references. This leads us to the next section.

Graph normalized Gold in Kuzu

Here our job is easier than Neo4J since we have less objects to add to our database. Previously we had 15 nodes and 20 edges. Now we have 12 edges and 7 nodes. Significant reduction. Kuzu has support for STRUCTs as opposed to Neo4J. Also it still can import JSON files and this is what we can extract from PostgreSQL directly. Pandas does not help us here. Kuzu make the process painless for edges if you export them in from/to format through the JSON plugin.

The good thing is that in our business layer we have simple primary keys with the exception of cast business object. Instead of hashing the composite key to a numeric key, we take advantage that the keys are numerical and join them as strings with underscores. Now our data has simple primary keys something that is compatible with Kuzu which requires simple keys for performance reasons. Unfortunately we do not have multiple labels at our disposal due to the way Kuzu works and this is why we need the content_type field in content business object. Still the category business object, despite the normalization, contains self-references. This is not very easy to handle in the Gold Business Layer because of the natural tree like form of categories. A big win for its graph representation. Not only that, we do not need to carry root_id or parent_id fields anymore. They are encoded in the relationships. Here are the details for Kuzu. The node

the root and parent relationships (no need to specify any primary keys because we are in from/to mode, it can’t get more convenient than this)

and the whole graph.

Conclusion

In this article (fancier than the last one) we did a comprehensive modeling of the OMDB dataset that we started in a previous article. It entailed a lot of work because there was an attempt to expose the difficult subject of Gold Modelling as pedagogically as possible. I hope you find something useful here. We went through the classic and business modelling. We also presented the graph view of these models which adds much to the picture. As always the code is available to study run and modify for your purposes. If you find a bug, or suggest an improvement feel free to add at the comments. As an interesting exercise you can do the same for the IMDB dataset.