Exploring the IMDB dataset with DBT

Writing data tests for a (mighty) real dataset

Introduction

(Disclaimer: This article differs from my typical infrastructure articles like this one I made for the company I work for. Still, it is modern data engineering of tutorial nature).

I am a fan of movies usually SciFi and SciFi/Horror. My preference is on the 80s/70s and early 90s. I believe they are the best years of film production (and dance music production). Usually the runtime of a movie is between 85 to 95 minutes. However for me it is a little longer. People usually take a break to make some pop-corn or to check their email when they watch the movie. But others, me included, head over to IMDB to learn about the cast, what other movies they have played since or before that specific movie, what is their personal life. What this starts as an information retrieval exercise, soon becomes a random walk in a graph because you see other movies they played and you notice other stars in that movies. Now you start scanning their lives. The process repeats recursively (some backtracking is also involved). It takes time to realise that wandering around takes you too far. This is interrupted by you remembering to watch the rest of the movie (or you waking up on the keyboard and realise that it is not your bed). The biggest question is whether all this information is available somewhere to be used for Machine Learning, Data Analytics or even Data Engineering experimentation. It turns out that there is such a dataset. It is a set of dumps of tables that can serve the above purpose. They are reduced and non-commercial. I was lucky to find it and at some point I wanted to explore it. But then I only knew the standard machine learning approach of using pandas or tibbles to work with a dataset. What happens when the data are too much to be processed in your RAM? While this dataset can fit in a huge RAM (8GB), still if it outgrows it, what is the best approach? Fortunately Apache Spark and Trino have solved this issue for us. But this is not enough. They tackle only the processing and storage problem. The Data Quality Assurance (DQA) part is in many cases a custom thing that uses these processing engines to get compute KPIs and some Python or Scala library.

The good old Warehousing architecture

The last decade has seen unprecedented increase in storage and processing capacity thanks to the “Big Cloud” platforms that offer though their blob stores petabyte level storage capacities and through big server farms, peta-flop level processing power. What happened in the past was that when reporting was necessary for e.g. bank transactions, data were queried from the system that hosted the transactions, they were filtered and aggregated (in order to reduce volume and keep what made Business Intelligence (BI) sense) and stored in an other system for BI reporting. The reporting queries could also filter and aggregate to further reduce data in order to fit a specific “view” of the system.

This transition from system to system was lossy, for storage and computational performance reasons. It is known as ETL.

ELT and the Medallion architecture

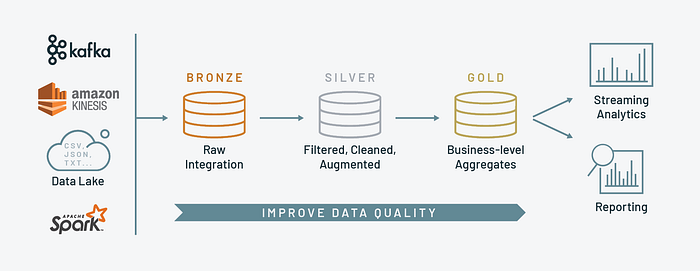

But compression as we said is lossy and it does not allow for flexibility. This is where ELT can help and was enabled by the Big Data processing systems we mentioned before. Here we just copy over everything to a Data Lake (our Landing Layer), add a subset of them to the processing system. This copy (and its representation as a data source for computations) forms the Bronze Layer. Subsequent transformations can take the data to the Staging Area (which casts data to data types that make business sense) and then to the Silver Layer where the data have good quality for BI and represents fragments of information. In order to enable BI over Business Objects (capturing the Domain) and not only “data”, a further layer the Gold forms tables or views by combining data over the scattered information in Silver Layer. All of this is done through queries which were not possible between different database systems, in other words we have inter-DB queries and views. In Databricks all these layers exist in the same system called Lakehouse. The separation of the various layers is called the Medallion architecture.

While the Databricks one (see above figure) is the most famous, I think the one presented here is making even more sense.

Transforming with DBT

While we talked about the ELT and explained the EL part we were fuzzy about the Transform part. DBT enters the picture typically after the “Bronze Layer”. DBT actually can be used to do the transformations for Staging Area, Silver and Bronze Layer (and of course Gold Layer). It is a very clever application of Jinja templating to refactor SQL queries that lead from one layer to the other. It also adds Data Unit Testing which tests data for pathogenies. These can be e.g. an actor that exists in a movies table as an ID(entifier) but this ID is unknown to the actors table. Or a movie produced in 200AD (rest assured that it is a data error and not a proof of time travel or UFOs). This way you can go back to the sources and correct them, ignore them or something else. But you need tests for two reasons. The first reason is the inadequacy of database types furthering constrain the shape of data. The missing actor error above is a manifestation of a referential integrity error. The second reason is again the inadequacy of database types. This inadequacy is of different nature as the “time travel” error above revealed. In the first case, we need other rules to strengthen the typing, these rules expressed as data tests are called type constraints (related to business logic). The second case has to do with business rules. These are the business constraints which are rules expressed by data tests too. In any case, finding the issues (so as to correct them) is done through tests. Sometimes, we also need cleansed tables for the next level until the issues in the data are fixed. DBT offers these functionalities (and more). For a very basic introduction you need the official documentation and possibly this. The present article is the next step.

Introducing our approach.

In previous articles I used Docker and Co to introduce pipelines. Here the purpose is not to make an end to end pipeline but instead focus on the data testing part. The reason is that the dataset is not very small. Fortunately we have a suite (DBT) that allows convenient construction of data tests and tables or views. They are non-trivial parts as you will see (or experience by following the code and the article). So instead of using a process to grab files and move it to a “Bronze Layer”, we take the “Bronze Layer” as a given and the natural format of the files to be its natural format, gzip compressed TSV files. Who said they have to be Delta tables after all!!!! We will not use docker, so as to minimise complexities or performance issues. Also we will not use a “cloud friendly” database or a relational store like PostgreSQL. But we definitely need an OLAP store. Since we will run it locally, DuckDB is a perfect match. It will emulate our Big Data processing system and is seamlessly supported by DBT. For this reason we take a parent folder called “imdb_files” hosting our downloaded files as a “Bronze Layer”. If you need to change folder, change it here:

As you can see, DuckDB allows us to keep our files compressed and specify a set of CSV parameters, like compression and seaparator. We ensure that the input is typed as VARCHAR fields. After all it is a CSV. Contrary to what software engineers do, we do not need a fancy ORM here. Even if we integrate our PostgreSQL with the site that hosts these data, we will unzip them and store them as a table with VARCHAR entries. The rest are the work of DBT. The ORM is there to store and retrieve business objects for the services and for specific tables for specific databases . Our pipeline will produce tables that span multiple databases for reporting and business objects that model the whole business (equally important).

Describing the IMDB dataset

Just blindly getting the data of the IMDB dataset into views and writing tests is not the most fruitful approach. We need to first get what piece of information we can from the distributor and run some initial exploration, at least at the level of finding the correct types of the dataset to populate our casting process. In this specific case we are lucky enough to have some description of the various fields and some hints about the types. The first hint is that ‘\N’ is used as a NULL in our fields. As we said we get everything as a VARCHAR initially. So our first task is to convert all ‘\N’ to NULLs. So, when we create our DBT model we need to first create a NULL corrected view of our data. While we should normally do it for only the fields we have this information, it is soon obvious that this is not a good strategy. Lets use our duckdb (we make the assumption that you have installed it somehow) to run across our first pathogeny.

Enter the “imdb_files” folder where the CSVs reside and run

duckdb

Inside duckdb we run

This is definitely not empty.

In my instance it says 12354111 entries. While deathYear can be nullable, the birthYear can have empty fields too even if it is not mentioned.

There are also fields that are lists. One field is also a list but the dataset says nothing about it. The non-declared list is the characters field (yes, we suspected that from the name) in the title.principals.tsv.gz file. It should have been a list of strings and not a string in the description. But even for the other list fields, where we expect to be a string with comma separated values, we soon learn that this is not the case!!!!

Let’s explore with our duckdb the title.akas.tsv.tgz file and try to verify that the types field is an enum where fields are strings separated with comma

As you can see names are totally messed. There is a folder in the codebase called “notebooks” where we have a python notebook for this dataset. The analysis included finds out that types and attributes fields have ‘\x02’ as a separator. We found this, and the rest of the cases by data tests (thank you DBT). The more familiar you become with data tests and exploration of a dataset, the more you “see” some patterns forming.

Seeding DBT

For writing data tests DBT needs constants. These exist in DBT in the form of small tables. These can host for example all the countries, all the languages or a number of constants like that we found above. The seeds that are mentioned in the documentation of the dataset are

- seeds/countries_with_regional_codes.csv

- seeds/distribution_types.csv

- seeds/language_codes.csv

The first is taken from this repo and we renamed “alpha-2” field to “alpha2”. This is what we are interested. The second is constructed from the documentation of title.akas.tsv.tgz . As it is mentioned, it can change without notice. But we use the seed to form a data test, in order to capture errors that can appear without notice too! The third one is from Datahub. The distribution_types is not necessarily a seed. We could test the fields with the “accepted values strategy”

However the field hosting this value is an array. And so we exported them to a seed file and written a custom vectorized referential test.

Writing the tests and cleansing

The dbt testing documentation or something along the lines of this Advanced testing in dbt tutorial can be used as an necessary introduction as we go beyond basics. Here we apply this knowledge to create tests for our models. We focus on type constraints (data tests). We will use the title basics model. First we need to create our bronze model

As you can see, we create a view (you can create a table for performance). Then we create a view built upon the previous view for the Staging Area (called Intermediate Layer in our code base).

The Bronze Layer is the aforementioned NULL correction location. The Intermediate Layer is the type casting location. There is one caveat in the case of name_basics_model_cated.sql

We use TRY_CAST so as to not fail early. Strictly this is a cleansing operation, but we make the compromise that non-integer values are null values. If we blindly casted we would have failed very early in our pipeline.

So now we have a view of the table with correct types. As we said previously, types are not enough. So we add data tests that complement the types. Tests would fail immediately in the Intermediate Layer, so we make a set of cleansing operations to provide the Silver Layer. This is the one that is tested for data quality.

As you can see our first test is about genres

Because of dbt_utils limitations we apply it on the the model (and not the specific column). This knowledge is a codified version of the dataset documentation. Another very typical test is that tconst is a primary key

or that the region in title_akas is an enumeration.

and the field titleId field may be coming from another table.

Things to keep in mind:

- Never forget to add documentation to columns

- Delegate some of the documentation to tests

- Things not tested are things that are not guaranteed to hold.

For the above, uniqueness of the ordering field is not tested. So, it does not hold (feel free to see what happens if you enable the test). But there are also type constraints that are misleading and have to be found with experimentation like the combination of columns above.

For each field misled by documentation I wrote uniqueness tests. Of course they failed and I had to resort to careful reading + experimentation to uncover the hidden type constraint. This should have been explicit. Run the tests, modify them to fail and read them carefully. There is a lot of type constraints not covered in the documentation that leads to type constraints.

Finally some fields are arrays and the elements of the arrays are foreign keys. For this one we wrote a specific generic assertion:

Some of the tables still had test failures even after following the documentation. For example there are directors used that do not correspond to the name_basics_model. For these cases we created a cleansed model that do not exhibit these pathogenies. Here is an example.

In order to make the transition to the Silver Layer, we need business constraints (new data tests) and possible cleansing (including imputation).

Data profiling

We should definitely include a report about the statistics of the columns of the various tables. A business person can have a quick look to see whether this makes sense and any tests need to be included. We include here 2 kinds of data profiling (the first was used above to uncover seeds).

jupyslq

We use jupysql to view our Duckdb tables and profile them through a jupyter notebook. Execute the “data_profiling.ipynb” notebook to see an example. Here is a report for our title_principals_model table.

It seems that this report is intolerant to NULLs. Also as you can see in the notebook it also cannot unnest columns.

Soda core

Soda offers a cloud platform for data quality (like DBT) but offers an open core. We will not go deep in this. You can read excellent Medium articles (related to duckbd) or the official documentation. Here we just create the bare minimum to create our data profiling reports. We need a configuration and a checks file

Now, having installed the dependencies from the “requirements.txt” file, we just execute the profiling.

soda scan -d imdb_dataset -c configuration.yaml checks.yaml -srf soda_scan.jsonSoda reports some issues with boolean columns as they are not supported. Either create a view with these cast to 0/1 or use jupysql. A report, is included as “soda_scan.json”. Load it to a json editor to see the reports.

Keep in mind data profiling has big computational requirements and this is why it is preferable to run it on cloud.

Conclusion

In this tutorial article we took the challenge of analysing the IMDB non-commercial dataset. We also made our first step in writing a complete data testing suite, especially for the type constraints kind of testing. We found various pathogenies and we showed ways to detect them and cleanse them. We introduced data profiling of the IMDB dataset with two different ways as a first step towards requesting business constraints by the product people by submiting a report for review. As always the code is provided . Feel free to dowload, modify and execute the code towards learning DBT data testing. Now, you have no obstacles to use this very useful dataset for your experiments. Writing this article was an aspiring experience for me and improved my understanding of the material. I hope you enjoyed this too.

UPDATE!!!! Code snippets brough to their latest versions, text cleaned and added fresh reports.